Revisiting Autofocus for Smartphone Cameras

Abdullah Abuolaim, Abhijith Punnappurath, and Michael S. Brown

Department of Electrical Engineering and Computer Science

Lassonde School of Engineering, York University, Canada

{abuolaim, pabhijith, mbrown}@eecs.yorku.ca

Abstract

Autofocus (AF) on smartphones is the process of determining how to move a camera's lens so that certain scene content is in focus. The underlying algorithms used by AF systems, such as contrast detection and phase differencing, are well established. However, it is less clear how to determine a high-level objective for how best to focus a particular scene. This is evident in part from the fact that different smartphone cameras employ different AF criteria: for example, some attempt to keep items in the center in focus, others give priority to faces, and still others maximize the sharpness of the entire scene. The existence of different objectives raises the research question of whether there is a preferred objective. This question becomes more interesting when AF is applied to videos of dynamic scenes. The work in this paper aims to revisit AF for smartphones within the context of temporal image data. As part of this effort, we describe the capture of a new 4D dataset that provides access to a full focal stack at each time point in a temporal sequence. Based on this dataset, we have developed a platform and associated application programming interface (API) that mimic real AF systems, restricting lens motion within the constraints of a dynamic environment and frame capture. Using our platform, we evaluated several high-level focusing objectives and gained interesting insights into what users prefer. We believe our new temporal focal stack dataset, AF platform, and initial user-study findings will be useful in advancing AF research.
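Contrast detection, mentioned above, scores each candidate lens position by how sharp the resulting image is and selects the sharpest one. The sketch below is a minimal illustration, not the method used in this work: it assumes a focal stack given as a list of grayscale NumPy arrays and uses the mean squared intensity difference between neighboring pixels as the contrast measure (one common choice among many). The `roi` argument is a hypothetical parameter showing how a "keep the center in focus" objective can pick a different lens position than a "sharpen the whole scene" objective.

```python
from typing import Optional, Sequence, Tuple

import numpy as np


def sharpness(image: np.ndarray) -> float:
    """Contrast measure: mean squared horizontal plus vertical neighbor difference."""
    img = image.astype(np.float64)
    gx = np.diff(img, axis=1)  # differences between horizontal neighbors
    gy = np.diff(img, axis=0)  # differences between vertical neighbors
    return float((gx ** 2).mean() + (gy ** 2).mean())


def contrast_detection_af(
    focal_stack: Sequence[np.ndarray],
    roi: Optional[Tuple[int, int, int, int]] = None,
) -> int:
    """Return the index of the sharpest image in the focal stack.

    roi (hypothetical parameter) is (y0, y1, x0, x1); when given, only that
    region is scored, e.g. to mimic a center-priority AF objective.
    """
    if roi is not None:
        y0, y1, x0, x1 = roi
        focal_stack = [img[y0:y1, x0:x1] for img in focal_stack]
    scores = [sharpness(img) for img in focal_stack]
    return int(np.argmax(scores))


if __name__ == "__main__":
    rng = np.random.default_rng(0)
    pattern = rng.random((32, 32))
    # Slice 0: only the center is textured (center subject in focus).
    center_sharp = np.zeros((32, 32))
    center_sharp[8:24, 8:24] = pattern[8:24, 8:24]
    # Slice 1: only the periphery is textured (background in focus).
    periphery_sharp = pattern.copy()
    periphery_sharp[8:24, 8:24] = 0.0
    stack = [center_sharp, periphery_sharp]
    print(contrast_detection_af(stack))                        # whole-scene objective
    print(contrast_detection_af(stack, roi=(10, 22, 10, 22)))  # center-only objective
```

In this toy stack the whole-scene objective selects slice 1, where more of the image is textured, while the center-only objective selects slice 0, illustrating how the choice of objective, rather than the underlying contrast algorithm, decides where the lens goes.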

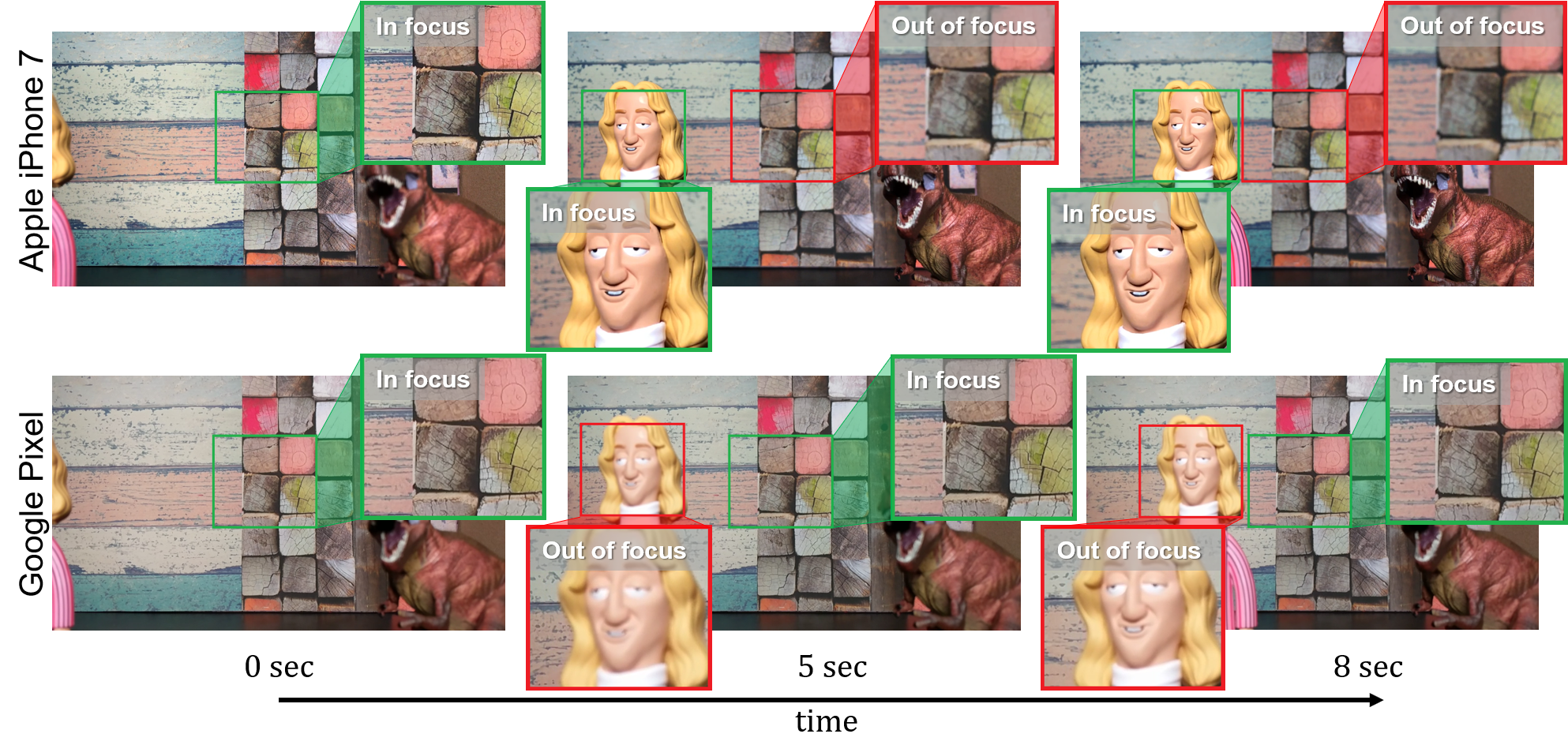

Different smartphone cameras employ different AF criteria

An Apple iPhone 7 and a Google Pixel are used to capture the same dynamic scene, controlled via translating stages. At different time slots in the captured video (0 sec, 5 sec, and 8 sec), it is clear that each phone uses a different AF objective. It is unclear which AF objective is preferred. This is a challenging question to answer because it is very difficult to access a full (and repeatable) solution space for a given scene.

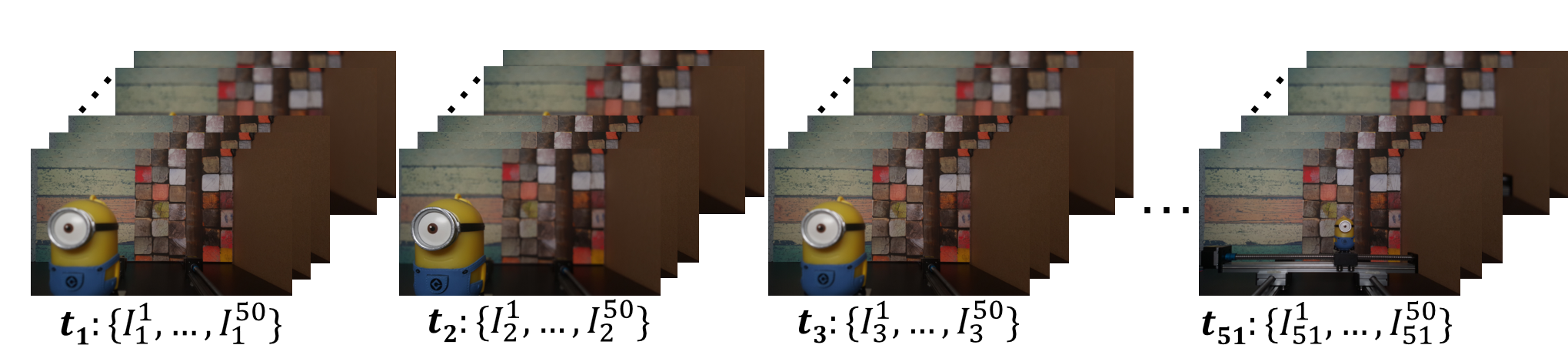

4D dataset

Example of the temporal image sequence for scene 3. The focal stack at each time point $t_i$ consists of 50 images, $I_{i,1}, \dots, I_{i,50}$.
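Because every time point has a full focal stack, an AF strategy can be replayed offline by choosing, at each time point, which image of that stack the lens "captures". The sketch below is a hypothetical illustration of that idea, not the platform's actual API: `scores[i, j]` is assumed to hold a focus score for lens position `j` at time point `i`, and `max_step` is an assumed limit on how many focal positions the lens can travel between consecutive time points, standing in for the lens-motion constraints described in the abstract.

```python
from typing import List

import numpy as np


def simulate_constrained_af(scores: np.ndarray, start: int, max_step: int) -> List[int]:
    """Track the lens position over time under a per-step motion limit.

    scores[i, j] is assumed to be a focus score for lens position j at time
    point i (higher means better focus). max_step is a hypothetical limit on
    how many focal positions the lens may move between consecutive time points.
    """
    num_times, num_positions = scores.shape
    if not 0 <= start < num_positions:
        raise ValueError("start must be a valid lens position")
    pos = start
    track = []
    for i in range(num_times):
        # Objective: the lens position with the best score at this time point.
        target = int(np.argmax(scores[i]))
        # The lens cannot jump there instantly; move at most max_step positions.
        pos += int(np.clip(target - pos, -max_step, max_step))
        track.append(pos)
    return track


if __name__ == "__main__":
    scores = np.zeros((4, 50))  # 4 time points, 50 lens positions each
    for i, target in enumerate([40, 40, 10, 10]):
        scores[i, target] = 1.0
    print(simulate_constrained_af(scores, start=0, max_step=15))  # [15, 30, 15, 10]
```

With the best-focus position at 40, 40, 10, and 10, a lens starting at position 0 visits 15, 30, 15, and 10: it lags behind the moving target, which is exactly the behavior a full-focal-stack dataset lets one evaluate repeatably.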

Out-of-focus versus in-focus examples

Example images from our 4D dataset, each shown out of focus and in focus.

Scene 1, time point 01

Out of focus

In focus

Scene 3, time point 19

Out of focus

In focus

Scene 6, time point 38

Out of focus

In focus