Defocus Deblurring Using Dual-Pixel Data

Abdullah Abuolaim¹ and Michael S. Brown¹˒²

¹York University, Toronto, Canada

²Samsung AI Center, Toronto, Canada

{abuolaim,mbrown}@eecs.yorku.ca

Abstract

Defocus blur arises in images that are captured with a shallow depth of field due to the use of a wide aperture. Correcting defocus blur is challenging because the blur is spatially varying and difficult to estimate. We propose an effective defocus deblurring method that exploits data available on dual-pixel (DP) sensors found on most modern cameras. DP sensors are used to assist a camera's auto-focus by capturing two sub-aperture views of the scene in a single image shot. The two sub-aperture images are used to calculate the appropriate lens position to focus on a particular scene region and are discarded afterwards. We introduce a deep neural network (DNN) architecture that uses these discarded sub-aperture images to reduce defocus blur. A key contribution of our effort is a carefully captured dataset of 500 scenes (2000 images) where each scene has: (i) an image with defocus blur captured at a large aperture; (ii) the two associated DP sub-aperture views; and (iii) the corresponding all-in-focus image captured with a small aperture. Our proposed DNN produces results that are significantly better than conventional single image methods in terms of both quantitative and perceptual metrics – all from data that is already available on the camera but ignored.

Presentation

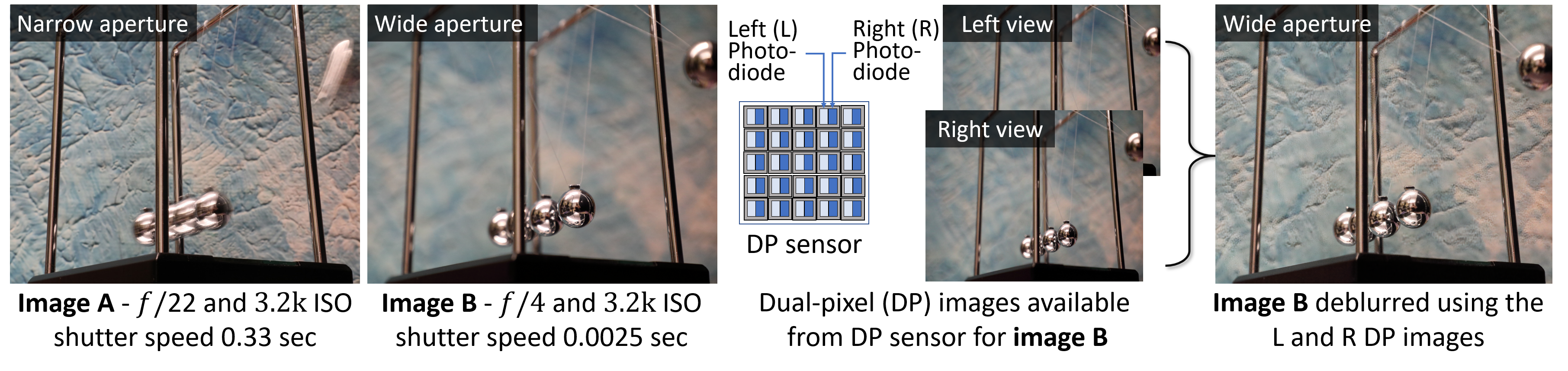

Defocus and motion blur vs. aperture size and shutter speed (i.e., sensor's exposure to light)

Images A and B are of the same scene and same approximate exposure. Image A is captured with a narrow aperture (f/22) and slow shutter speed. Image A has a wide depth of field (DoF) and little defocus blur, but exhibits motion blur from the moving object due to the long shutter speed. Image B is captured with a wide aperture (f/4) and a fast shutter speed. Image B exhibits defocus blur due to the shallow DoF, but has no motion blur. Our proposed DNN uses the two sub-aperture views from the dual-pixel sensor of image B to deblur image B, resulting in a much sharper image.
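The aperture and shutter trade-off described above can be checked with a little arithmetic. The sketch below assumes the standard photographic relation that, at fixed ISO, exposure is proportional to shutter time divided by the square of the f-number. The function names and the 1 s reference shutter time are hypothetical and chosen only for illustration; the actual shutter speeds used for images A and B are not stated here.

```python
import math


def stops_between(f_narrow: float, f_wide: float) -> float:
    """Stops of light gained when opening up from f/f_narrow to f/f_wide.

    Light gathered scales with 1 / N^2, and one stop is a factor of two.
    """
    return 2.0 * math.log2(f_narrow / f_wide)


def equivalent_shutter(t_ref: float, f_ref: float, f_new: float) -> float:
    """Shutter time at f/f_new that matches the exposure of t_ref at f/f_ref.

    Assumes fixed ISO and exposure proportional to t / N^2.
    """
    return t_ref * (f_new / f_ref) ** 2


if __name__ == "__main__":
    # Hypothetical reference: image A shot at f/22 with a 1 s shutter time.
    t_a = 1.0
    t_b = equivalent_shutter(t_a, f_ref=22.0, f_new=4.0)
    print(f"f/22 -> f/4 gains {stops_between(22.0, 4.0):.2f} stops")
    print(f"matching shutter time at f/4: {t_b:.4f} s")
```

Under these assumptions, opening up from f/22 to f/4 gains roughly five stops, so the shutter time can be about thirty times shorter for the same exposure. That is why image B avoids motion blur but has a shallow DoF.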

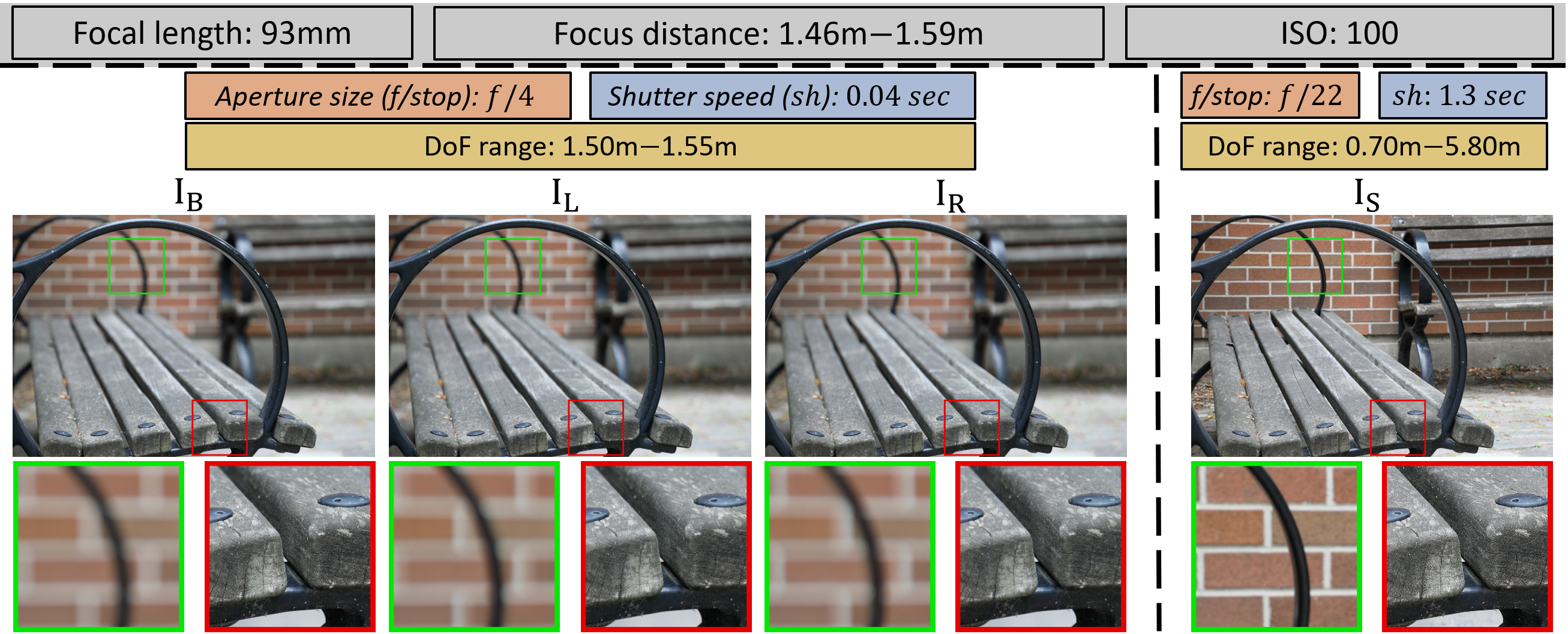

Dual-pixel defocus deblurring dataset

An example of an image pair with the camera settings used for capturing. I_L and I_R represent the left and right DP views extracted from I_B. The focal length, ISO, and focus distance are fixed between the two captures of I_B and I_S. The aperture size is different, and hence the shutter speed and DoF are accordingly different too. In-focus and out-of-focus zoomed-in patches are extracted from each image and shown in green and red boxes, respectively.
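The capture constraints in this caption can be expressed as a small consistency check on a scene record. This is a minimal sketch, not the authors' dataset tooling: the `Capture` and `ScenePair` classes, their field names, and the `check_pair` helper are all hypothetical, and the settings in the usage example are made up.

```python
from dataclasses import dataclass
from typing import List


@dataclass(frozen=True)
class Capture:
    """Camera settings for one shot (hypothetical field names)."""
    path: str
    f_number: float
    shutter_s: float
    focal_length_mm: float
    iso: int
    focus_distance_m: float


@dataclass(frozen=True)
class ScenePair:
    """One scene: blurred wide-aperture shot, its two DP views, sharp shot."""
    blurred: Capture   # wide aperture, shallow DoF
    sharp: Capture     # narrow aperture, wide DoF
    left_view: str     # path to the left DP view of the blurred shot
    right_view: str    # path to the right DP view of the blurred shot


def check_pair(pair: ScenePair) -> List[str]:
    """Return a list of violated capture constraints (empty if consistent)."""
    problems = []
    b, s = pair.blurred, pair.sharp
    # Settings that must stay fixed between the two captures.
    if b.focal_length_mm != s.focal_length_mm:
        problems.append("focal length differs")
    if b.iso != s.iso:
        problems.append("ISO differs")
    if b.focus_distance_m != s.focus_distance_m:
        problems.append("focus distance differs")
    # The blurred shot uses the wider aperture (smaller f-number) ...
    if not b.f_number < s.f_number:
        problems.append("blurred shot is not at the wider aperture")
    # ... and therefore the shorter shutter time.
    if not b.shutter_s < s.shutter_s:
        problems.append("blurred shot does not have the shorter shutter time")
    return problems


if __name__ == "__main__":
    # Made-up settings, for illustration only.
    blurred = Capture("scene_b.png", 4.0, 1 / 60, 50.0, 100, 1.5)
    sharp = Capture("scene_s.png", 22.0, 0.5, 50.0, 100, 1.5)
    pair = ScenePair(blurred, sharp, "scene_l.png", "scene_r.png")
    print(check_pair(pair))
```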

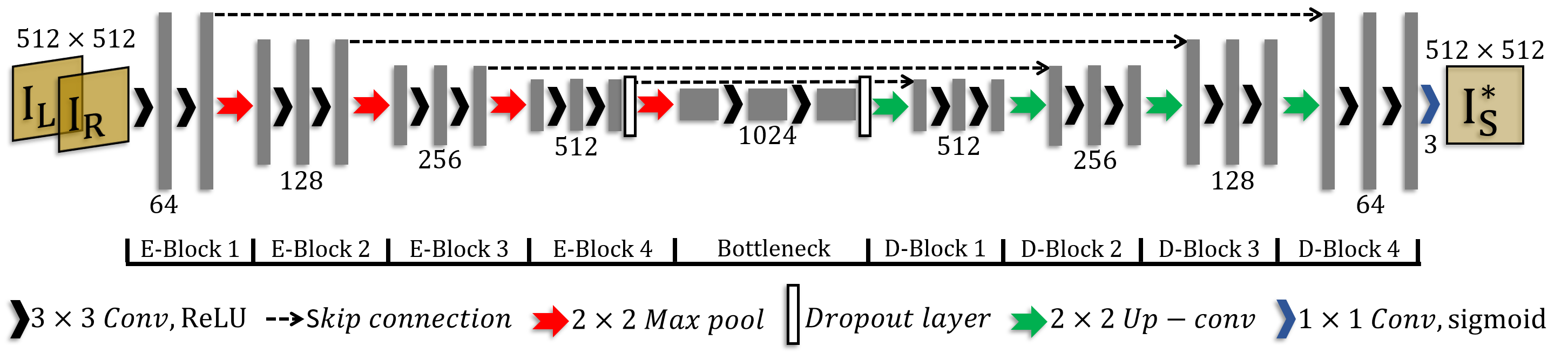

Dual-Pixel deblurring architecture (DPDNet)

Our proposed DP deblurring architecture (DPDNet). Our method utilizes the DP images, I_L and I_R, for predicting the sharp image I_S^* through three stages: encoder (E-Blocks), bottleneck, and decoder (D-Blocks). The size of the input and output layers is shown above the images. The number of output filters is shown under the convolution operations for each block.
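To make the encoder, bottleneck, and decoder flow concrete, the following sketch traces feature-map shapes through a generic network of this kind. It is an illustration under stated assumptions, not the DPDNet definition: it assumes the two DP views are RGB and concatenated into a 6-channel input, that each E-Block is followed by 2x downsampling, and that each D-Block is preceded by 2x upsampling. The `trace_shapes` helper and the filter counts in the usage example are hypothetical; the actual values are the ones given in the architecture figure.

```python
from typing import List, Sequence, Tuple

Shape = Tuple[int, int, int]  # (height, width, channels)


def trace_shapes(
    height: int,
    width: int,
    encoder_filters: Sequence[int],
    bottleneck_filters: int,
    in_channels: int = 6,   # assumption: two RGB DP views concatenated
    out_channels: int = 3,  # assumption: one RGB sharp image
) -> List[Tuple[str, Shape]]:
    """List the output shape of each stage of a symmetric encoder-decoder."""
    factor = 2 ** len(encoder_filters)
    if height % factor or width % factor:
        raise ValueError(f"height and width must be divisible by {factor}")

    h, w = height, width
    shapes: List[Tuple[str, Shape]] = [("input", (h, w, in_channels))]

    # Encoder: convolve at the current resolution, then halve it.
    for i, filters in enumerate(encoder_filters, start=1):
        shapes.append((f"E-Block {i}", (h, w, filters)))
        h, w = h // 2, w // 2

    shapes.append(("bottleneck", (h, w, bottleneck_filters)))

    # Decoder: double the resolution, then convolve (mirrors the encoder).
    for i, filters in enumerate(reversed(encoder_filters), start=1):
        h, w = h * 2, w * 2
        shapes.append((f"D-Block {i}", (h, w, filters)))

    shapes.append(("output", (h, w, out_channels)))
    return shapes


if __name__ == "__main__":
    # Hypothetical sizes and filter counts, for illustration only.
    for name, shape in trace_shapes(512, 512, [64, 128, 256, 512], 1024):
        print(f"{name:<12} {shape}")
```

The trace shows the design property that matters for deblurring: the output has the same spatial size as the input, so the network predicts a sharp pixel for every blurred pixel.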

Results demo

The demo compares blurred inputs with their deblurred outputs for the following examples:

Canon DSLR camera, examples 01 to 04

Pixel 4 smartphone camera, examples 01 and 02

Acknowledgments

A big thanks to Niloy Costa for his great photography skills and for helping capture the images for our dataset.

This study was funded in part by the Canada First Research Excellence Fund for the Vision: Science to Applications (VISTA) programme and an NSERC Discovery Grant. Dr. Brown contributed to this article in his personal capacity as a professor at York University. The views expressed are his own and do not necessarily represent the views of Samsung Research.